CONTACT DETAILS: Research Results Transfer Office-OTRI

University of Alicante

Tel.: +34 96 590 99 59

Email: areaempresas@ua.es

http://innoua.ua.es

The Robotics and Three-Dimensional Vision (RoViT) research group at the University of Alicante has developed a real-time communication platform between the hearing community and the deaf community that uses sign language. Communication is simple and bidirectional, and relies on natural language processing techniques together with the camera and screen of a computer or mobile device.

The group is looking for companies interested in acquiring this technology for commercial exploitation or in developing it to adapt it to new needs of the deaf community.

Communication between hearing people and deaf people who use sign language has always been a serious problem. It is especially difficult in everyday situations where an interpreter is not always available, such as shopping, catering, transport, health care or administrative procedures.

Several automatic Sign Language (SL) recognition systems already exist, but they have limitations, for example:

• Recognition is performed sign by sign rather than on complete sentences.

• They are not bidirectional: they convert sign to text, but not text and/or speech to sign.

• They do not use facial keypoints, which allow more accurate sign language recognition by also analysing facial expressions and the vocalisation of signs.

The present invention therefore aims to improve the daily lives of millions of people with a novel technology that overcomes these limitations.

To enable communication between hearing people and deaf people who use sign language, two functionalities have been developed:

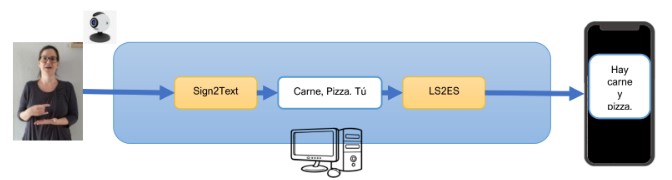

1) Sign to text: on the one hand, the platform recognises and interprets what a user signs in front of a camera and provides a transcription as text and voice (see Figure 1). The system that implements this functionality consists of a camera of any type, including the cameras integrated into mobile devices and tablets, which captures images of a person signing in Sign Language (SL). These images are processed by neural networks to identify the signs performed, producing a complete sentence or text in SL. This text is the input to a third module, which translates it into Oral Language (OL) using a natural language processing (NLP) technique. The generated text is displayed on a screen, which can be that of a computer, a mobile device or a tablet.

Figure 1. Diagram of the functionality corresponding to the generation of text and speech from signing in front of a camera.
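The offer does not describe the internal models, so the following Python sketch only illustrates how the stages of the sign-to-text functionality could be chained: camera frames go to a sign recogniser, and the resulting SL glosses go to a translation module. `SignToTextPipeline`, `fake_recogniser`, `phrasebook_translate` and the `PHRASEBOOK` table are hypothetical names; the two stand-ins replace the neural networks and the NLP module with trivial lookups so that the data flow can be run end to end.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

Frame = bytes  # hypothetical: raw image data from any camera
Gloss = str    # hypothetical: label of one recognised SL sign


@dataclass
class SignToTextPipeline:
    # Neural networks in the described system: camera frames -> SL glosses.
    recognise_signs: Callable[[Sequence[Frame]], List[Gloss]]
    # NLP module in the described system: SL glosses -> oral-language text.
    translate: Callable[[List[Gloss]], str]

    def run(self, frames: Sequence[Frame]) -> str:
        # Recognise the whole signed sentence first, then translate it as a unit.
        glosses = self.recognise_signs(frames)
        return self.translate(glosses)


# Toy stand-ins used only to exercise the pipeline; they are NOT the real models.
PHRASEBOOK: Dict[Tuple[Gloss, ...], str] = {
    ("COFFEE", "ME", "WANT"): "I would like a coffee.",
    ("BILL", "PLEASE"): "Could I have the bill, please?",
}


def fake_recogniser(frames: Sequence[Frame]) -> List[Gloss]:
    # Pretend each frame already decodes to one gloss label.
    return [f.decode("ascii") for f in frames]


def phrasebook_translate(glosses: List[Gloss]) -> str:
    # Unknown sentences fall back to the glosses joined in lower case.
    return PHRASEBOOK.get(tuple(glosses), " ".join(glosses).lower())


if __name__ == "__main__":
    pipeline = SignToTextPipeline(fake_recogniser, phrasebook_translate)
    print(pipeline.run([b"COFFEE", b"ME", b"WANT"]))  # I would like a coffee.
```

Keeping both stages as plain callables means a trained recogniser or translation model could be swapped in for either stand-in without changing the other.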

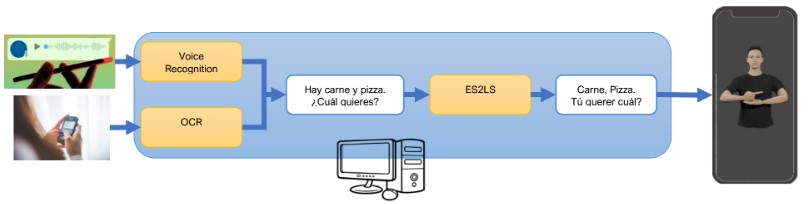

2) From text and/or voice to sign: on the other hand, the platform takes a text or voice message, obtains the corresponding SL transcription and displays it on screen by means of a virtual avatar (see Figure 2). In this case, the user enters the text to be signed either by voice, using any microphone, or with a keyboard (including those that allow handwritten text input). Once the text has been obtained, a natural language processing (NLP) technique converts it into a sequence of signs in Spanish Sign Language (LSE). These signs are performed by a virtual avatar displayed on a screen, which can be that of a computer, a mobile device or a tablet.

Figure 2. Diagram of the functionality corresponding to the generation of the signing by means of a virtual avatar from text or audio entered by a user.
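As a minimal sketch of the text-to-sign direction, assuming that voice input has already been turned into text by a speech recogniser (not shown), the code below maps words to sign glosses with a toy lexicon and produces the list of animation clips an avatar would play. `LEXICON`, `DROPPED`, the upper-case English placeholder glosses, the fingerspelling fallback and the `avatar/<GLOSS>.anim` naming scheme are all assumptions made for illustration, not details of the patented system. The toy also ignores LSE grammar and word order, which the real NLP technique would have to handle.

```python
import re
from typing import Dict, List

# Hypothetical lexicon: oral-language word -> placeholder sign gloss.
LEXICON: Dict[str, str] = {
    "i": "ME", "want": "WANT", "coffee": "COFFEE", "water": "WATER",
    "doctor": "DOCTOR", "where": "WHERE",
}
# Words assumed, in this toy example, to have no manual sign of their own.
DROPPED = {"a", "an", "the", "is"}


def text_to_glosses(text: str) -> List[str]:
    glosses: List[str] = []
    for word in re.findall(r"[a-záéíóúñü]+", text.lower()):
        if word in DROPPED:
            continue
        if word in LEXICON:
            glosses.append(LEXICON[word])
        else:
            # Assumed fallback: fingerspell unknown words letter by letter.
            glosses.extend(f"LETTER-{ch.upper()}" for ch in word)
    return glosses


def glosses_to_clips(glosses: List[str]) -> List[str]:
    # Hypothetical naming scheme for the avatar's animation clips.
    return [f"avatar/{g}.anim" for g in glosses]


if __name__ == "__main__":
    glosses = text_to_glosses("I want a coffee")
    print(glosses)                    # ['ME', 'WANT', 'COFFEE']
    print(glosses_to_clips(["ME"]))   # ['avatar/ME.anim']
```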

MAIN ADVANTAGES OF THE TECHNOLOGY

• The system works in real time and is simple and fast to use.

• It is able to recognise complete sentences, unlike other platforms that only offer sign-by-sign recognition.

• A mobile device with a camera and a screen is all that is needed for this communication.

• The accuracy of the system reaches 95%.
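The offer does not say how complete sentences are recognised. One widely used approach in continuous sign language recognition is to train the network with Connectionist Temporal Classification (CTC) and decode its per-frame outputs greedily; the sketch below shows only that decoding step, as an assumed illustration rather than the RoViT method. Each input element is taken to be the best-scoring label for one video frame, and the hypothetical `BLANK` label marks frames with no sign.

```python
from typing import List, Optional, Sequence

BLANK = "<blank>"  # CTC "no sign" label (assumed name)


def ctc_greedy_decode(frame_labels: Sequence[str]) -> List[str]:
    """Collapse per-frame best labels into a sentence of glosses.

    Consecutive repeats are merged and blanks are removed, so a continuous
    stream of frames yields the whole signed sentence rather than one sign.
    """
    glosses: List[str] = []
    previous: Optional[str] = None
    for label in frame_labels:
        if label != previous and label != BLANK:
            glosses.append(label)
        previous = label
    return glosses


if __name__ == "__main__":
    frames = ["ME", "ME", BLANK, "WANT", "WANT", BLANK, "COFFEE", "COFFEE"]
    print(ctc_greedy_decode(frames))  # ['ME', 'WANT', 'COFFEE']
```

A blank between two identical labels keeps them as two separate signs, which is how CTC represents a sign repeated in a sentence.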

INNOVATIVE ASPECTS

• It allows two-way communication, i.e. conversion not only from sign to text but also from text and/or speech to sign. Until now, only sign-to-text conversion was available.

• The system includes facial keypoints that allow for more accurate sign language recognition by including the analysis of facial expressions and vocalisation of signs.
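A minimal sketch, assuming 2D keypoints are already available from some pose-estimation step, of how facial keypoints could be appended to hand keypoints to form one feature vector per frame, so that a recognition model also sees facial expressions and mouth movements. The function name, the `include_face` switch and the use of a reference point (for instance the tip of the nose) are hypothetical choices, not details taken from the patent application.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) image coordinates of one keypoint


def frame_features(hands: Sequence[Point], face: Sequence[Point],
                   reference: Point, include_face: bool = True) -> List[float]:
    """Flatten one frame's keypoints into a single feature vector.

    Coordinates are shifted so that `reference` (hypothetically the nose tip)
    becomes the origin, which makes the features independent of where the
    signer stands in the image.
    """
    rx, ry = reference
    points = list(hands) + (list(face) if include_face else [])
    features: List[float] = []
    for x, y in points:
        features.extend((x - rx, y - ry))
    return features


if __name__ == "__main__":
    hands = [(0.6, 0.7), (0.4, 0.8)]
    face = [(0.5, 0.5), (0.5, 0.6)]  # e.g. nose tip and mouth centre
    nose = (0.5, 0.5)
    print(len(frame_features(hands, face, nose)))                      # 8
    print(len(frame_features(hands, face, nose, include_face=False)))  # 4
```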

The technology is at an advanced stage of development, with a working demo and an accuracy of 95%. So far, it has been trained mainly with vocabulary from the catering and health fields, but it is intended to be extended to new areas.

This system can be adapted to many areas where deaf people need to communicate without an interpreter, for example health, finance, public administration, catering, shopping, transport and justice.

It is especially useful in the education sector, as it enables communication with deaf students and facilitates learning by conveying concepts and knowledge in a way that everyone can understand. More information at: https://www.sign4all.net/

We are looking for companies or entities interested in acquiring this technology for commercial exploitation through patent licensing agreements or for the development of the technology and its adaptation to the specific needs of their activity.

This technology is protected by a patent application:

• Patent title: “Sistema y método de comunicación entre personas sordas y personas oyentes” (System and method for communication between deaf people and hearing people)

• Application number: P202430179

• Application date: 12/03/2024

Medicine and Health

Carretera San Vicente del Raspeig s/n - 03690 San Vicente del Raspeig - Alicante

Tel.: (+34) 965 90 9959